Security Review: Smashing abstract—more on Lab 2

I was lost at first when starting Lab 2, as I had little to no experience with web programming. After floundering around for a few hours I got a better idea of what we were supposed to be doing, and with the XSS cheat sheet I was able to rapidly discover appropriate exploits for each of the filter versions on the mock search engine (except #5, of course).

Once I’d satisfied myself that I could get all the cookies I wanted, I immediately launched into a more thorough investigation of the environment I had been working with, and began discovering real vulnerabilities. I was excited by the prospects available and decided to make a security review out of it. I spent the next couple of days experimenting, then jumped onto the blog to write my security review, only to find that two of my classmates had addressed the same topic the day before. Eriel Thomas addressed the security of the server at yoshoo.cs.washington.edu in his post “Smashing the Lab for Fun and Profit”, whereas David Balatero discussed his success in phishing about a third of the security class (including me… ouch) in “UW CSE Resources”. Just goes to show you that you should always examine links, even from trustworthy and computer-savvy friends :P.

I nearly despaired at several days’ work gone for naught, but after carefully reading both of the posts I believe that I still have something to contribute. My discussion will focus a bit more on the security of abstract and fill in some additional details.

You cannot take what I have rightfully stolen!

One of the requirements for Lab 2 was that all students’ scripts must run on their space on abstract.cs.washington.edu. According to the abstract account documentation, all CGI scripts on abstract should be executed with the suEXEC module, which should mean that they have all the same privileges (and limitations) that you do on the same machine. That may or may not be the case (I haven’t tested those limits yet), but I do know that all the files I observed being created by CGI scripts on abstract were owned by the user apache and had world-readable permissions. You may not be able to ls /homes/abstract/someuser, but since you know something about the assignment and its requirements you can probably make very accurate guesses most of the time, looking for files like authtokens.txt, README(.txt), etc. The implications are obvious: anyone with an account on the machine can read another student’s stolen cookies straight off the disk. As soon as I realized this I took steps to make sure I wasn’t vulnerable to the outright theft of stolen cookies, and no longer kept that sort of information in world-readable files. Now I was safe, right?
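For anyone who wants to check their own files, here is a minimal Python sketch of the permission test described above. It assumes a POSIX system, and it works on a throwaway temporary file whose name is simply borrowed from the guesses mentioned earlier. Note that clearing the world-readable bit only helps if other people's scripts do not run as the same user that owns the file, which is exactly the part I haven't tested.

```python
import os
import stat
import tempfile


def is_world_readable(path: str) -> bool:
    """Return True if the 'other' read bit is set on the file at path."""
    return bool(os.stat(path).st_mode & stat.S_IROTH)


def restrict_to_owner(path: str) -> None:
    """Leave only owner read/write; drop every group and other permission."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)


# Demonstration on a temporary file (hypothetical stand-in for a real log of tokens).
with tempfile.TemporaryDirectory() as tmp:
    demo = os.path.join(tmp, "authtokens.txt")
    with open(demo, "w") as fh:
        fh.write("not-a-real-token\n")
    os.chmod(demo, 0o644)            # the world-readable state observed on abstract
    before = is_world_readable(demo)
    restrict_to_owner(demo)          # equivalent to chmod 600
    after = is_world_readable(demo)

print(before, after)
```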

Close the barn door, the horses are missing!

I then learned that the server logs for abstract are also readable by anyone able to authenticate with a CSE NetID and thought “Well, that just tears it.” I knew it was too late to protect myself, but it turns out that having complete access to the logs on abstract doesn’t carry quite the same set of risks as having access to the logs on yoshoo. If you handled things the right way from the beginning I believe that it’s possible to be safe from the depredations of any attackers that don’t have access to yoshoo, and I’ll describe how.

I, and many other people, began with hand-crafted injections even if we did script it later, which meant going for injections that were as compact as possible. For me, new to JavaScript, this meant just making use of an injected document.location mutation. This is, unfortunately, an HTTP GET, which meant that my requests (stolen cookies and all) were visible to anyone reading the abstract logs, because the full request URL, query string included, is written to the log. The solution is not to use GET, which is a bit of a problem since all of the easiest, most compact ways to push the document cookie out to an attacker involve GET. However, you can use your injection to write a hidden form into the page and auto-submit it using POST. I won’t go into the exact details, since this lab might get re-used in the future. Then the stolen cookie never becomes a matter of public record, and your grades in the database are safe. But only if you’d used POST from the get-go.
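To see why the choice of method matters from the log reader's side, here is a small Python sketch. It assumes the logs follow Apache's Common Log Format, which I am not asserting is the exact format on abstract; the two log lines, the script name log.php, and the parameter name c are all made up for illustration.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Assumed Common Log Format: host ident user [time] "METHOD target PROTO" status size
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<target>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+)'
)


def leaked_params(line: str) -> dict:
    """Return the query parameters a reader of this log line could recover."""
    match = LOG_LINE.match(line)
    if match is None:
        return {}
    # Only the request target is logged; a POST body never appears here.
    return parse_qs(urlsplit(match.group("target")).query)


# Hypothetical log entries, not taken from any real server.
get_line = ('10.0.0.1 - - [14/Feb/2009:21:04:00 -0800] '
            '"GET /~someuser/log.php?c=session%3Dabc123 HTTP/1.1" 200 17')
post_line = ('10.0.0.1 - - [14/Feb/2009:21:04:05 -0800] '
             '"POST /~someuser/log.php HTTP/1.1" 200 17')

print(leaked_params(get_line))   # the cookie value is recoverable from the log
print(leaked_params(post_line))  # nothing to recover from the request line
```

The same kind of pattern matching is what makes regularly structured logs so easy to filter in bulk.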

The abstract server logs are quite the leak though. As Eriel mentioned when talking about the requests to the girlscout.php script, you can read all the cookies in the log and use those to change other students’ grades, and read the URLs and parameters of their cookie-logging scripts to add massive amounts of plausible chaff to their take. Reading the logs can also allow you to take someone’s y.um.my cookie. Eriel mentioned the possibility of adding chaff that way, but you could also use that to mount a distributed (amongst IDs, not machines) denial of service attack by posting many different links to many different y.um.my accounts.

Where do we go from here?

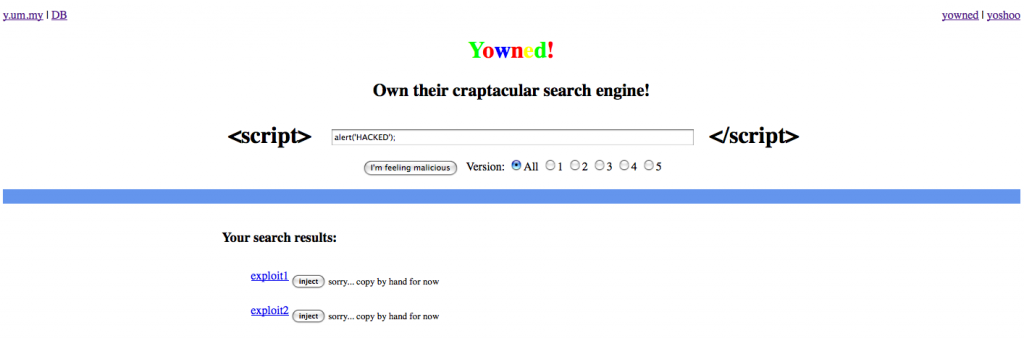

I had earlier built an injection generator (pictured above) capable of embedding user-chosen JavaScript through any of the five yoshoo filters. Despite all my work I still felt that I may have simply been too late for a security review on this topic, so I grabbed my generator and set to experimenting again to look for more vulnerabilities.

Yowned!

When I discovered the abstract logs I also made a proof-of-concept script to act as a search engine over authtokens. I first filtered all the abstract logs from the last month to discard irrelevant requests (real cookies, etc) and made my own copy to make the job of searching easier. The regular structure of the logs makes them wonderfully amenable to that sort of perusal, but after some consideration I decided not to include that capability in the portal seen above.

One possibility that had occurred to me, as it had to Eriel, was to make my own cookies. I was also frustrated by salted hashes, and decided to see if I could get source code for the opaque scripts that were ruining my game. Since I was already using hidden forms that auto-submitted values I set, I thought I might be able to make use of the type="file" input tag with an auto-filled value. Here, at least, a small ray of sunshine shines on an otherwise bleak field of browser and server vulnerabilities: I discovered that no popular browser but Opera (for varying values of “popular”) will incorporate a pre-filled value into a type="file" input tag, and even Opera asks for confirmation. The bot on yoshoo was running Firefox 3, so it was safe. More’s the pity for me… That could have been fun.

In conclusion: This lab was a lot of fun. The unintentional security flaws (from the framework they had to work with, not the course staff’s fault) were even more fun than the XSS sandbox we had to play in. If possible, at least the yoshoo log visibility issue that Eriel mentioned should be fixed, because it gives away just too much. It might be fun, though, to add another dimension to this assignment by in some way factoring in the vulnerabilities that come with the sort of exposure your work gets on abstract. That way the lab would have elements of both offensive and defensive security. I don’t think this sort of vulnerability is the end of the world for an assignment like this. After all, it seems that I and others were restrained by ethics and not technical limitations from wreaking havoc.

Comment by oterod

February 14, 2009 @ 9:04 pm

Haa! Awesome. Sooo awesome.